Tl;dr: I’m using Duplicacy with the new Web UI. It’s hosted in a Docker container and currently pushes data to an Azure storage account.

Also, wow: I just had a slight heart attack while writing this. I removed Docker from my NAS, which blew away a whole share of my Docker data (14 different containers, including all my Nextcloud personal files!). They were all backed up with Duplicacy, and while I had tested restoring a few files before, you never know. It wasn’t as painless as I’d like. Partly that was my fault for mounting the drives into the container read-only, partly the GUI isn’t super great yet, and mostly the Azure connections kept getting reset and the underlying CLI doesn’t account for that. But it’s all back and humming along again. Phew!
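Connections that get reset mid-transfer are the classic case for retrying transient errors with exponential backoff instead of failing the whole restore. The sketch below shows that general pattern in Python. It is not Duplicacy’s code and uses no Azure SDK; `flaky_download` is a hypothetical stand-in for fetching one backup chunk.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    op: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    retry_on: tuple[type[BaseException], ...] = (ConnectionResetError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call op(), retrying transient errors with capped exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return op()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # The ceiling doubles each attempt (1s, 2s, 4s, ...) up to max_delay; picking a
            # random delay below it ("full jitter") keeps parallel transfers out of lockstep.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")


# Demo: a hypothetical chunk download that fails twice with a reset, then succeeds.
attempts = 0


def flaky_download() -> bytes:
    global attempts
    attempts += 1
    if attempts < 3:
        raise ConnectionResetError("connection reset by peer")
    return b"chunk-bytes"


data = retry_with_backoff(flaky_download, sleep=lambda seconds: None)  # no real waiting in the demo
```

Only errors listed in `retry_on` are retried, so a genuine bug (say, a missing chunk) still fails immediately rather than being hidden behind retries.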

Options Considered

I’ve only included the main contenders below. In particular, I was interested in using non-proprietary storage backends that allowed me multiple options (B2, AWS, Azure, etc). The ones that were quickly removed and not tested:

Now for the ones that were tested.

CrashPlan

CrashPlan served me well for many years, and I used it successfully from two different continents. There are definitely some good things about it: continuous backup, dedupe at the block level, compression, and the option to provide your own encryption key. However, with the changes a while ago (and the continual changes I get emailed about), I knew it was time to look for other options. Plus, even with one device, the price was going to jump from $50/year to $120/year, which, while not horrible, was definitely a motivator.

Synology’s Hyper Backup

I store most of my data on my Synology NAS, which comes with some built-in tools (Glacier Backup, Hyper Backup, and Cloud Sync). I was actually running CrashPlan in a Docker image on the NAS before doing this assessment. Of the three tools, Hyper Backup was really the only one I considered, as Glacier is for snapshots and Cloud Sync isn’t really a backup product. Hyper Backup can back up to multiple different storage providers, including Azure, which was my preference. Like CrashPlan, it can dedupe at the block level, compress, and let you specify your own encryption. Unlike CrashPlan, it isn’t continuous (it can do hourly), it will send failure emails, and it won’t automatically include new folders under a root if only some of the subfolders are selected. The service is free; you only pay for the storage you use.

Duplicati

I ran Duplicati from a Docker image on my NUC. This meant it had access to some files that Hyper Backup could not reach, which was good. Plus, it can back up to multiple different storage providers, including Azure. Like CrashPlan, it can dedupe at the block level, compress, and let you specify your own encryption. Unlike CrashPlan, it isn’t continuous (it can do hourly), and I was getting lots of errors when adding new folders. Plus, its database is notorious for becoming corrupt, which is not something you want with your backups. The service is free; you only pay for the storage you use.

CloudBerry Linux

I ran CloudBerry from a Docker image on my NUC. This meant it had access to some files that Hyper Backup could not reach, which was good. Plus, it can back up to multiple different storage providers, including Azure. Like CrashPlan, it can dedupe at the block level, compress, and let you specify your own encryption. Unlike CrashPlan, it isn’t continuous (it can do hourly), but I could receive notification emails. One really neat feature is that CloudBerry understands Azure storage tiers (hot, cool, and archive) and can manage a file’s lifecycle across them. However, while the files are encrypted in blob storage (you can’t open them), they keep their original folder structure and names. Additionally, the GUI isn’t great, and I was getting a few errors. The software is not free ($30), and you also pay for the storage you use.
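Tier lifecycle management of this kind boils down to an age-based rule: data that hasn’t changed in a while moves to cheaper, slower tiers. Below is a minimal Python sketch of such a rule over Azure’s Hot, Cool, and Archive tiers. The 30- and 180-day thresholds are hypothetical, not CloudBerry’s defaults, and this is not how CloudBerry implements it.

```python
from datetime import date, timedelta
from enum import Enum


class Tier(Enum):
    HOT = "Hot"
    COOL = "Cool"
    ARCHIVE = "Archive"


# Hypothetical thresholds (days since last modification); tune to your own access pattern.
COOL_AFTER_DAYS = 30
ARCHIVE_AFTER_DAYS = 180


def choose_tier(last_modified: date, today: date) -> Tier:
    """Pick the cheapest tier this data's age allows under the thresholds above."""
    age_days = (today - last_modified).days
    if age_days >= ARCHIVE_AFTER_DAYS:
        return Tier.ARCHIVE
    if age_days >= COOL_AFTER_DAYS:
        return Tier.COOL
    return Tier.HOT


today = date(2024, 1, 1)
tiers = [choose_tier(today - timedelta(days=d), today) for d in (1, 45, 365)]
```

The trade-off for backups is restore time: Archive-tier blobs are offline and must be rehydrated before they can be read, which can take hours.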

Restic

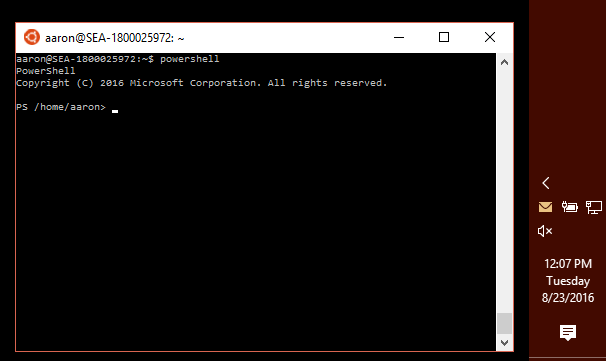

I tried to use restic but was never able to get it to work. I tried running it in Docker, but the CLI and I just never got along (there’s no GUI). It can use different storage providers, including Azure, and it can dedupe and encrypt. However, it can’t compress, which means backups will be larger. The service is free; you only pay for the storage you use.

Duplicacy

I ran Duplicacy from a Docker image on my NUC. The web UI was still in beta when I was testing it, but fundamentally it met my needs, and there is also a functional CLI (the UI basically just drives the CLI anyway). Running on the NUC meant it had access to some files that Hyper Backup could not reach, which was good. Plus, it can back up to multiple different storage providers, including Azure. Like CrashPlan, it can dedupe at the block level, compress, and let you specify your own encryption. Unlike CrashPlan, it isn’t continuous (it can run every 15 minutes), but I could receive notification emails. It’s also blazingly fast and can dedupe across machines if I were backing up more than one. It is not free ($10), and you pay for the storage you use.
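Block-level dedupe, including across machines, works because each chunk is stored under a hash of its contents, so identical data is uploaded only once no matter which machine produced it. The Python sketch below illustrates the idea under simplifying assumptions: it uses tiny fixed-size chunks (Duplicacy actually uses variable-size, content-defined chunking), and an in-memory dict stands in for the storage backend.

```python
import hashlib

CHUNK_SIZE = 4  # tiny for the demo; real tools use chunks on the order of megabytes


class ChunkStore:
    """Content-addressed store: each chunk is saved under its SHA-256 digest."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}
        self.uploaded_bytes = 0

    def backup(self, data: bytes) -> list[str]:
        """Split data into chunks, upload only unseen ones, and return the snapshot's chunk IDs."""
        ids = []
        for i in range(0, len(data), CHUNK_SIZE):
            chunk = data[i:i + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            if digest not in self.chunks:  # new content: "upload" it
                self.chunks[digest] = chunk
                self.uploaded_bytes += len(chunk)
            ids.append(digest)
        return ids

    def restore(self, ids: list[str]) -> bytes:
        """Rebuild a snapshot by concatenating its chunks in order."""
        return b"".join(self.chunks[d] for d in ids)


store = ChunkStore()
laptop = store.backup(b"AAAABBBBCCCC")  # three new chunks are uploaded
nuc = store.backup(b"AAAABBBBDDDD")     # only the "DDDD" chunk is new
```

The same property makes copying a backup to a second provider cheap: only the chunk IDs the destination doesn’t already have need to be transferred.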

Choosing

For each of the options above (except Restic, simply because I couldn’t get it to go), I set up test storage accounts in my Azure subscription and began backing up the same 50GB with each product. The key things I was looking for were: ease of use and setup, time to back up on an hourly basis, storage and transactions consumed (to get an idea of ongoing costs), and any issues I ran into.

Duplicati was the first to go, simply because of the errors I was getting while it backed up the files. That said, it was fast, at 1:02 for the incremental hourly scan and upload.

CloudBerry Linux was the next to go. This was due to it being more expensive to run (storage costs), a few errors, being second to last in speed at 1:23, and the readable folder and file names mentioned above.

Hyper Backup stuck it out the longest. Out of the box, it was definitely one of the easiest to set up. However, it was also the slowest to scan and back up, at 1:32 (probably because it runs on the NAS rather than on my NUC), and it was uploading more data than Duplicacy. To keep multiple copies, Hyper Backup would have to run two separate jobs that do exactly the same thing.

Duplicacy is what I am now using. It is incredibly fast (0:16 in the test, and only 2-5 minutes every hour to scan and upload my 900GB of actual backups), and it had the best cost usage for Azure. Additionally, I can easily clone to another online provider without rerunning the drive scan; it just copies the new backup chunks. I have also set up a versioning solution that runs weekly to prune the hourly snapshots. It is based on the same pruning schedule CrashPlan was using, and I’m seeing negligible storage increases month over month. The biggest risk is that it is a newer piece of software that may have some bugs or issues. As mentioned in the tl;dr, my restore has taken way longer than it should have due to improper retries and timeouts with Azure (all the data is there, though, and I can access it anywhere I install the Duplicacy CLI). Otherwise, I’ve been very happy and have actually cancelled my CrashPlan account.
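Duplicacy exposes pruning as `prune -keep n:m` options, meaning “for snapshots older than m days, keep one every n days” (with n = 0 deleting them all), e.g. `-keep 7:30 -keep 1:7`. The Python sketch below is a simplified re-implementation of that retention idea, not Duplicacy’s code. The rules in the demo are hypothetical and are not the CrashPlan-style schedule used above.

```python
from datetime import datetime, timedelta


def prune(snapshots: list[datetime], now: datetime, rules: list[tuple[int, int]]) -> list[datetime]:
    """Return the snapshots to keep.

    Each rule (n, m) means: for snapshots older than m days, keep one every n days;
    n == 0 deletes them all. The rule with the largest m the age exceeds applies.
    """
    rules = sorted(rules, key=lambda r: r[1], reverse=True)  # largest age threshold first
    kept: list[datetime] = []
    last_kept: dict[int, datetime] = {}  # per-rule time of the most recently kept snapshot
    for snap in sorted(snapshots):  # walk oldest to newest
        age_days = (now - snap) / timedelta(days=1)
        rule_index = next((i for i, (_, m) in enumerate(rules) if age_days > m), None)
        if rule_index is None:
            kept.append(snap)  # younger than every threshold: always keep
            continue
        n = rules[rule_index][0]
        if n == 0:
            continue  # too old under an n == 0 rule: delete
        prev = last_kept.get(rule_index)
        if prev is None or snap - prev >= timedelta(days=n):
            kept.append(snap)
            last_kept[rule_index] = snap
    return kept


# Demo: 60 days of hourly snapshots, thinned to daily after 7 days and weekly after 30.
now = datetime(2024, 3, 1)
snapshots = [now - timedelta(hours=h) for h in range(1, 60 * 24 + 1)]
kept = prune(snapshots, now, [(1, 7), (7, 30)])
```

Running this weekly, as in the setup above, keeps recent hourly detail while older history shrinks to a handful of daily and weekly snapshots, which is why storage grows so slowly.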

Note: Technically, using Azure is more expensive than sticking with CrashPlan would have been. The monthly storage cost for my backups storage account is $15-20. However, with credits, it works out to $0 for me. Plus, I’m now more in control of my backups than I was before, and I can choose whichever storage provider minimizes costs.
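To put the figures from this post side by side: the only inputs below are the CrashPlan price and the observed Azure storage range quoted above. Credits and the Duplicacy license are left out.

```python
# Inputs are the figures quoted in this post; Azure credits and software licenses are ignored.
CRASHPLAN_PER_YEAR = 120          # CrashPlan's new price for one device ($/year)
AZURE_PER_MONTH_RANGE = (15, 20)  # observed storage cost of the backups account ($/month)

azure_per_year = tuple(12 * monthly for monthly in AZURE_PER_MONTH_RANGE)
extra_vs_crashplan = tuple(yearly - CRASHPLAN_PER_YEAR for yearly in azure_per_year)

print(f"Azure: ${azure_per_year[0]}-{azure_per_year[1]}/year, "
      f"${extra_vs_crashplan[0]}-{extra_vs_crashplan[1]} more than CrashPlan")
# prints: Azure: $180-240/year, $60-120 more than CrashPlan
```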