I’ve been fighting this for a while (as have a few others, judging by some Google searches), and now that I have it resolved I figured I’d post it here.

At a high level: I’ve had a Surface Ergonomic Keyboard for a while and absolutely love it. However, I recently upgraded from a Surface Pro 5 to a Surface Pro 7, and now the keyboard keeps going to sleep and takes forever to wake back up. I’ve been on calls just hammering the Windows key to get it to wake up. Needless to say, it’s been super annoying: waiting 30 seconds or more for your keyboard to start responding again is not ideal for productivity (or sanity).

I’ve seen a few places suggesting that I just need to turn off the “Allow the computer to turn off this device to save power” setting. However, it took me a bit to figure out which device it applies to. It turns out the Power Management tab in the device’s hardware properties doesn’t show up until you select Change settings. So without further ado…

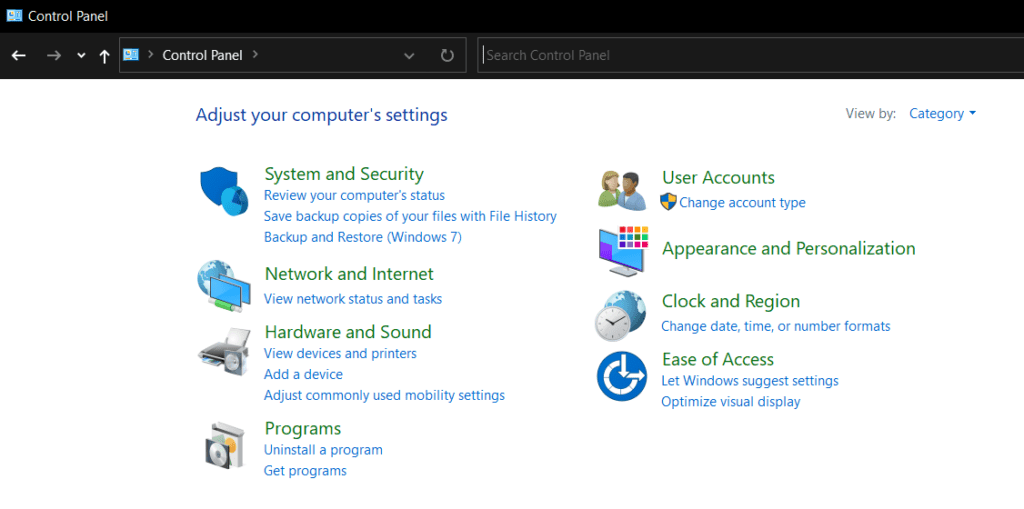

Open Control Panel.

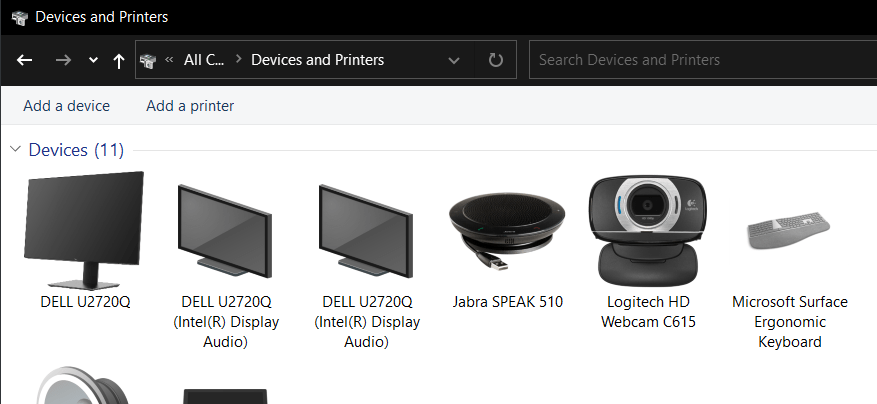

Select View devices and printers (or, if your Control Panel lists all the icons, select Devices and Printers).

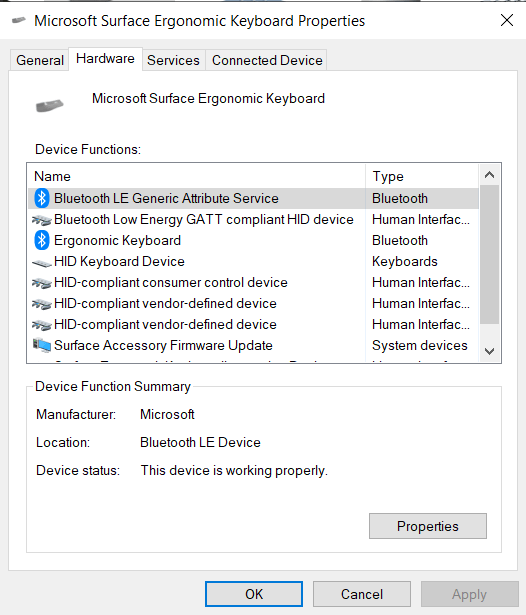

Open the Properties of the Ergonomic Keyboard and go to the Hardware tab.

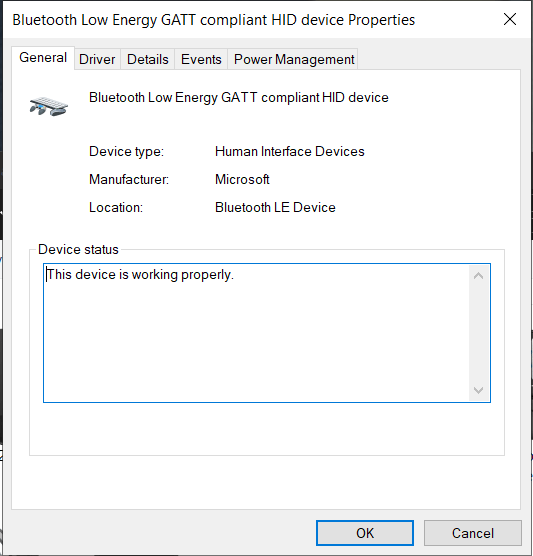

Select Bluetooth Low Energy GATT compliant HID device and click Properties.

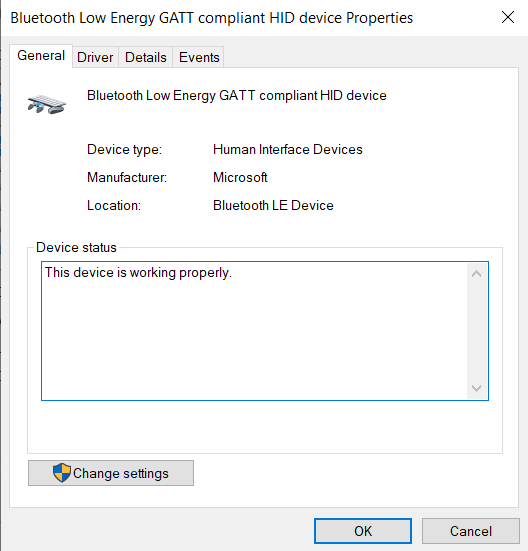

Click the Change settings button and, tada, there’s the Power Management tab!

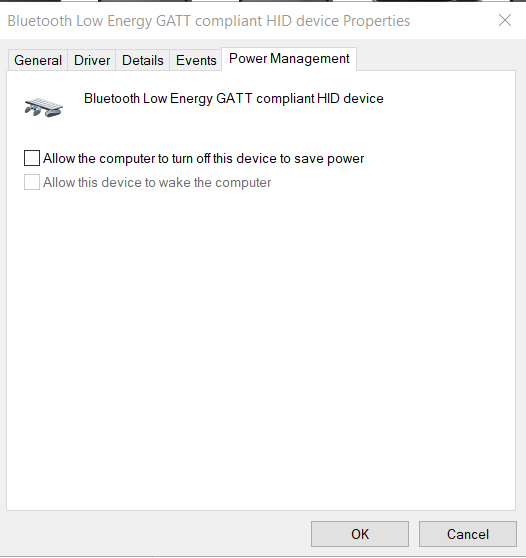

Select the Power Management tab, uncheck Allow the computer to turn off this device to save power, and click OK until you are back at the Devices and Printers screen. Yay, now it doesn’t go to sleep!

If for some reason you still don’t see the Power Management tab, you can do the following actions:

- Launch Registry Editor (press the Windows key and type “regedit”)

- Navigate to: “Computer\HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Power”

- Select the value named ‘CsEnabled’ (or create a DWORD (32-bit) Value with that name)

- Change the “Value data” to “0” (Base ‘Hexadecimal’) and select “OK”

- Reboot your machine

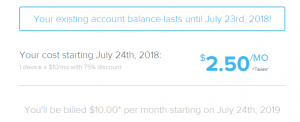

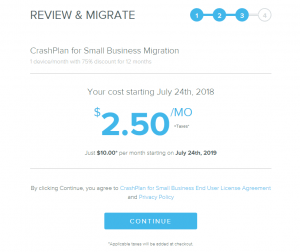

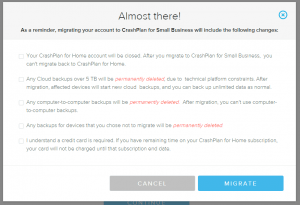
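If you’d rather script the registry change than click through Regedit, here’s a minimal Python sketch using the standard-library `winreg` module. The function name `disable_connected_standby` and the dry-run default are my own illustration, not part of any official tool. `CsEnabled` is the value that toggles Connected Standby (Modern Standby). The sketch assumes you run it from an elevated (Administrator) prompt, since it writes under HKEY_LOCAL_MACHINE, and you still need to reboot afterwards.

```python
import sys

# Registry location and value name from the Regedit steps above.
SUBKEY = r"SYSTEM\CurrentControlSet\Control\Power"
VALUE_NAME = "CsEnabled"


def disable_connected_standby(dry_run: bool = True) -> dict:
    """Set the CsEnabled DWORD under the Power key to 0.

    Hypothetical helper for illustration. With dry_run=True (the default)
    nothing is written; it only returns what it would do, so it is safe
    to run on any OS.
    """
    plan = {
        "hive": "HKEY_LOCAL_MACHINE",
        "subkey": SUBKEY,
        "value_name": VALUE_NAME,
        "type": "REG_DWORD",
        "data": 0,
    }
    if dry_run:
        return plan

    if sys.platform != "win32":
        raise OSError("writing the registry requires Windows")

    import winreg  # only available on Windows

    # CreateKeyEx opens the key (creating it if missing); writing under
    # HKEY_LOCAL_MACHINE needs an elevated (Administrator) process.
    with winreg.CreateKeyEx(
        winreg.HKEY_LOCAL_MACHINE, SUBKEY, 0, winreg.KEY_SET_VALUE
    ) as key:
        # SetValueEx creates the value if it doesn't exist yet, which covers
        # the "or create a DWORD (32-bit) Value" case.
        winreg.SetValueEx(key, VALUE_NAME, 0, winreg.REG_DWORD, 0)
    return plan


if __name__ == "__main__":
    # Pass --apply to actually write the value; reboot afterwards.
    print(disable_connected_standby(dry_run="--apply" not in sys.argv))
```

Running it without `--apply` just prints the planned change, so you can double-check the path before touching the registry.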

Boo, just got the email today that
