This may seem silly to some of you, but I am still getting used to Windows 2008. Sadly, I don’t spend as much time actually administering servers as I used to (silly management), so it usually takes me a bit longer to make my way around 2008 than 2003. I like to think they made everything more complex, but for some reason I’m sure I’ll get booed about that.

Anyways, this morning I was attempting to set up some performance alerts on some servers we’re having issues with. Basically, I wanted a server to email us when a counter reached a certain threshold. No big deal. Thinking I had this, I created the email app, created a performance counter, and then manually added it in.

Needless to say, that didn’t work. It took me a while to figure out why, too, as my little email utility worked fine. So I began a new search in order to find out how stupid I was being.

Turns out, quite a lot of stupid. Instead of using the utility, you can now use scheduled tasks, which include an email action! I basically used the instructions over at Sam Martin’s blog, which, I may add, he posted in April of this year. I’m not the only n00b. Plus, who doesn’t have an enterprise system that deals with this sort of stuff already (at least at the types of clients I work with)?

Perfmon

- Open up perfmon

- Create a new User Defined Data Collector Set

- Choose to create manually after naming

- Select Performance Counter Alert

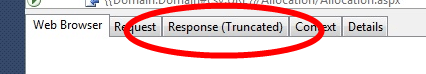

- Add in the performance counter you care about (mine was Requests Executing in ASP.NET Apps 4.0)

- Choose the user to run it as

- Edit the Data Collector inside the Data Collector Set

- Change the sample interval to whatever works for you (I set mine to 60s so we can be on top of issues prior to the users)

- Under Alert task, give it a name (e.g. EmailAlert) and give it a task argument (you can combine the placeholders to form a sentence like “the value at {date} was {value}”)

- Start the Data Collector Set

- Open up scheduled tasks

- Create a task, not a basic task

- Give it the exact same name you entered under Alert task in Perfmon (i.e. EmailAlert)

- Select “Run whether user is logged on or not” so that it runs all the time

- Create a new email action under the Action tab

- Enter all the info for from, to, subject, etc. To send to multiple people, comma separate the addresses.

- For the body, type whatever you want; $(Arg0) will be replaced with the task argument you entered under Alert task in Perfmon.

- Enter the SMTP server.

Done!
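If it helps to see how the pieces chain together, here is a rough Python sketch of the flow: the alert fills in {date} and {value}, the result is handed to the task as $(Arg0), and the email action drops it into the body. This is only an illustration of the idea, not what Windows does internally. The function names, addresses, timestamp format, sample value, and SMTP host are all made up for the example.

```python
from datetime import datetime
from email.message import EmailMessage
import smtplib


def render_task_argument(template: str, when: datetime, value: float) -> str:
    """Fill in the {date} and {value} placeholders of the alert's task argument."""
    stamp = when.strftime("%Y-%m-%d %H:%M:%S")  # assumed format, for illustration only
    return template.replace("{date}", stamp).replace("{value}", str(value))


def build_alert_email(sender: str, recipients: str, subject: str,
                      body_template: str, arg0: str) -> EmailMessage:
    """Build the message the way the email action is filled in:
    comma-separated recipients, and $(Arg0) swapped for the task argument."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipients  # e.g. "a@example.com, b@example.com"
    msg["Subject"] = subject
    msg.set_content(body_template.replace("$(Arg0)", arg0))
    return msg


def send_alert(msg: EmailMessage, smtp_host: str) -> None:
    """Hand the message to an SMTP server (not called in this sketch)."""
    with smtplib.SMTP(smtp_host) as server:
        server.send_message(msg)


# Hypothetical sample: the counter read 42 at 08:30 on some morning.
arg0 = render_task_argument(
    "the value at {date} was {value}", datetime(2011, 9, 1, 8, 30, 0), 42
)
message = build_alert_email(
    sender="alerts@example.com",
    recipients="ops@example.com, dev@example.com",
    subject="EmailAlert",
    body_template="Requests executing alert: $(Arg0)",
    arg0=arg0,
)
print(message.get_content())
```

The point is just that the name links the alert to the task, and the task argument is the only thing that travels between the two.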

One caveat: since the performance counter was tied to an application pool, whenever that pool disappears (IISReset, idle timeout, etc.) the counter stops.
